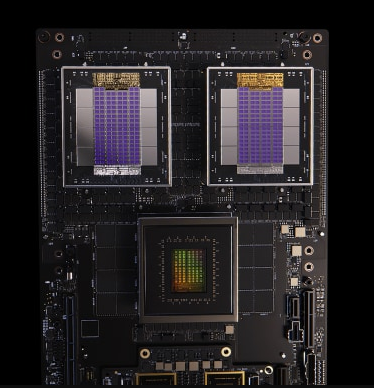

NVIDIA has officially entered a new phase of collaboration with ARM by making NVLink Fusion available across the entire Neoverse line, paving the way for computing racks in which CPUs, GPUs and accelerators exchange data coherently and at high bandwidth.

The decision expands the ecosystem created with Grace Blackwell and aims to reconfigure the infrastructure used by large cloud providers and research centers.

The move expands connectivity between processing units by allowing manufacturers to use NVLink Fusion to integrate their own ARM-based CPU designs with NVIDIA GPUs and other compatible accelerators.

Instead of relying on traditional buses limited in bandwidth and latency, the new approach creates a unified rack environment where data flows more efficiently between the chips.

The technology operates in conjunction with AMBA CHI C2C, a communication protocol created by ARM to deliver coherence between components. This compatibility reduces integration effort and simplifies the development of SoCs ready for large-scale AI workloads.

"Those designing their own ARM chips or using ARM IP can now adopt NVLink Fusion to connect those CPUs directly to GPUs or the rest of the NVLink ecosystem, and this is already happening in rack infrastructure and scale-up systems."

Ian Buck, Vice President of Accelerated Computing at NVIDIA

Neoverse gains ground among hyperscalers

ARM projects that the Neoverse family will reach half of the hyperscaler market by the end of 2025.

Internal platforms from AWS, Google, Microsoft, Oracle and Meta already use architectures derived from Neoverse to run AI, data science and large cloud services workloads.

The arrival of NVLink Fusion fits into this scenario by trying to alleviate memory and bandwidth bottlenecks that have become more evident with the growth of generative models. The greater the volume of parameters and the number of connected GPUs, the greater the dependence on robust interconnections.
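To give a rough sense of why interconnect bandwidth matters as models grow, the sketch below estimates how much data each GPU has to send when gradients are synchronized with a ring all-reduce, a common pattern chosen here only as an illustration and not something the announcement describes. The function names, the model size and the link speeds are all hypothetical assumptions; they are not NVLink Fusion specifications.

```python
def ring_allreduce_bytes_per_gpu(num_params, bytes_per_param, num_gpus):
    """Bytes each GPU sends during one ring all-reduce of the full gradient.

    Uses the standard ring all-reduce cost: 2 * (N - 1) / N * payload.
    """
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to exchange
    payload = num_params * bytes_per_param
    return 2 * (num_gpus - 1) / num_gpus * payload


def transfer_seconds(num_bytes, link_gb_per_s):
    """Idealized transfer time, ignoring latency and protocol overhead."""
    return num_bytes / (link_gb_per_s * 1e9)


if __name__ == "__main__":
    # Hypothetical example: 1 billion parameters stored in 2 bytes each, 8 GPUs.
    sent = ring_allreduce_bytes_per_gpu(1_000_000_000, 2, 8)
    # Hypothetical link speeds in GB/s, chosen only to show the scaling.
    for link in (50, 100, 500):
        print(f"{link} GB/s link: {transfer_seconds(sent, link):.4f} s per sync")
```

Under these assumptions, the data each GPU must send grows in direct proportion to the parameter count and approaches twice the gradient size as more GPUs join the ring, so the time spent on each synchronization is set almost entirely by link bandwidth.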

How the combination of ARM and NVIDIA affects the AI ecosystem

For the sector, the interest is less in promoting a proprietary standard and more in enabling heterogeneous systems where each company can choose its preferred accelerators without losing communication capacity between chips.

NVLink Fusion maintains data coherence between processors, accelerates inference, lowers latency in exchanges between GPU and CPU, and cuts memory copy steps.

In data centers under pressure for energy efficiency, any reduction in data movement translates into less heat, lower operating costs and greater density per rack.

Additionally, support for NVLink Fusion aligns with the expansion of the Grace Blackwell family and with trends seen in recent supercomputers, which adopt unified architectures to train models with trillions of tokens.

Consequences for manufacturers and the next generation of servers

The compatibility offered by ARM allows SoC manufacturers to build boards and entire systems with coherent communication between Neoverse CPUs and accelerators, something that was previously restricted to proprietary platforms.

This should reduce development time, accelerate customized projects and encourage new combinations between chips from different suppliers.

The industry’s expectation is that the adoption of NVLink Fusion will produce more compact machines, ready for computational physics workloads, embedded AI, industrial simulations and distributed cloud processing.

New era in Data Center architecture

The partnership marks a consistent expansion of NVIDIA’s strategy of connecting more and more components into a single computing plane.

Instead of relying exclusively on GPUs to absorb all the steps of AI, the company is betting on a mesh of chips integrated by coherent interconnection, capable of supporting larger networks, broader models and hybrid workflows.

At the same time, ARM positions Neoverse as the foundation of a flexible, interoperable ecosystem for those designing cutting-edge infrastructure.

The sum of the two visions creates an environment in which bandwidth and coherence are no longer limitations and begin to support the next wave of Data Centers.

Where can this evolution take us?

The arrival of NVLink Fusion on Neoverse indicates that the convergence between architectures will be decisive in the next AI cycles.

In an industry where the volume of data grows faster than manufacturing capacity, interconnection becomes the element that defines how far it is possible to scale.

The expected result is a more modular, more efficient environment capable of absorbing increasingly large experiments without wasting energy or blocking workflows. It is in this field that the foundations emerge for scientific models, advanced robotics, industrial simulations and applications that require unified computing.

Source: NVIDIA

Source: https://www.adrenaline.com.br/hardware/nvidia-arm-nvlink-fusion-neoverse-parceria-data-centers-ia/