Enfabrica, a startup backed by Nvidia, has announced the Elastic AI Memory Fabric System (EMFASYS), which can add terabytes of DDR5 memory to any server over an Ethernet connection.

The Ethernet-attached memory system is designed for large-scale inference workloads and is currently being tested with selected customers.

This is another Nvidia investment in the AI hardware industry. The central problem is that RAM capacity tends to be a bottleneck for many AI applications, but adding memory to a system is sometimes complicated or simply not possible.

After all, there is a limit to how large a single server can be built.

According to the company, the technology can reduce AI costs by up to 50% per token generated. And because token generation tasks can be distributed more evenly among servers, it should be possible to eliminate bottlenecks.

System Characteristics

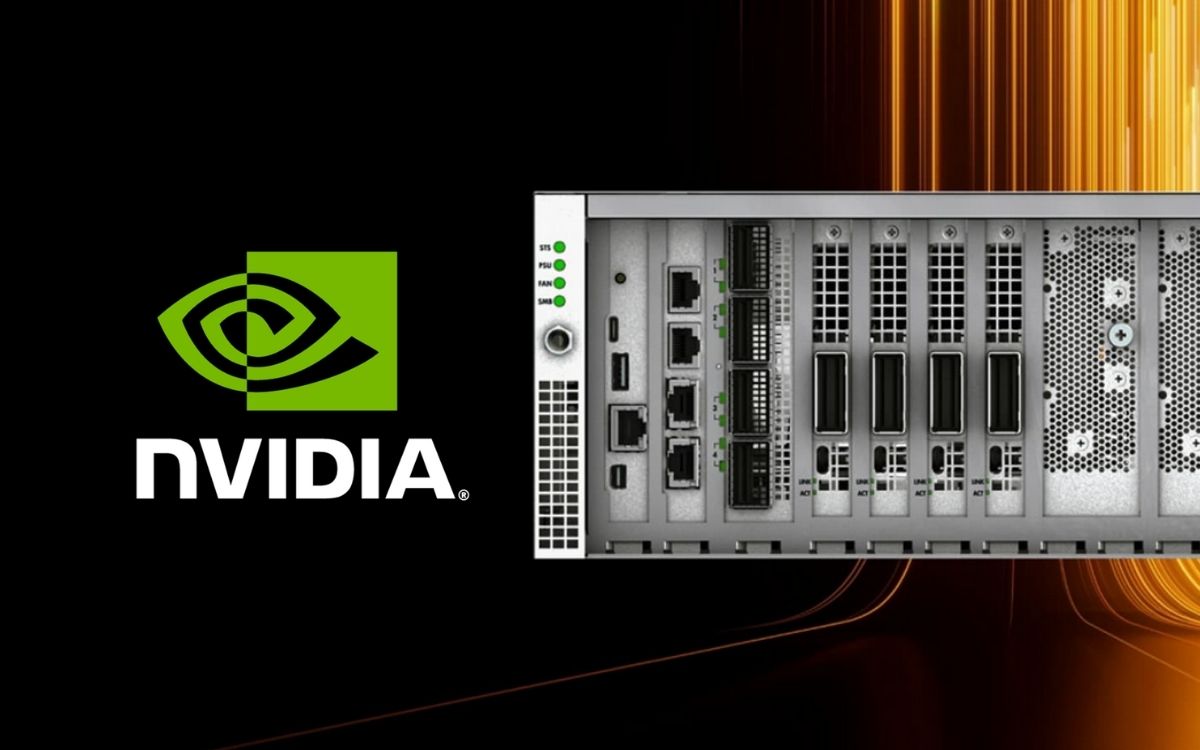

Enfabrica's EMFASYS is a rack-compatible system based on the company's ACF-S SuperNIC network chip. With a transfer rate of 3.2 Tb/s (400 GB/s), it connects up to 18 TB of DDR5 memory, with CXL on top.
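The two throughput figures quoted above are the same rate in different units (terabits versus gigabytes per second). The short sketch below checks that conversion and estimates how long one full pass over an 18 TB pool would take at that rate. The function names are illustrative, decimal units are assumed, and the timing is an idealized lower bound that ignores protocol overhead and contention.

```python
def tbps_to_gbytes_per_s(terabits_per_s: float) -> float:
    """Convert terabits per second to gigabytes per second (decimal units)."""
    gigabits_per_s = terabits_per_s * 1000  # 1 Tb = 1000 Gb
    return gigabits_per_s / 8               # 8 bits per byte


def seconds_to_stream(capacity_tb: float, rate_gbytes_per_s: float) -> float:
    """Idealized time to move capacity_tb terabytes at the given rate."""
    capacity_gb = capacity_tb * 1000        # 1 TB = 1000 GB
    return capacity_gb / rate_gbytes_per_s


rate = tbps_to_gbytes_per_s(3.2)            # rate quoted in the article
full_pass = seconds_to_stream(18, rate)     # 18 TB pool quoted in the article
print(f"{rate:.0f} GB/s, about {full_pass:.0f} s for one full pass")
```

In practice a server would read only the parts of the pool it needs, so the full-pass time is just a way to put the bandwidth and capacity figures on the same scale.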

The memory can be accessed by 4-way and 8-way GPU servers through standard 400G or 800G Ethernet ports, using remote direct memory access (RDMA) over Ethernet. In other words, an EMFASYS system can be added to virtually any AI server without difficulty.

Data moves between the GPU servers and the EMFASYS memory pool using RDMA. This allows zero-copy, low-latency memory access (measured in microseconds) without CPU intervention, using the CXL.mem protocol.

EMFASYS aims to meet the growing memory requirements of modern AI applications that use very long prompts, large context windows, or multiple agents. These workloads put significant pressure on the HBM attached to the GPU, which is limited and expensive.

By using an external memory pool, data center operators can flexibly expand the memory of an individual AI server.

What is required?

To access the EMFASYS memory pool, servers need memory-tiering software. Among other things, this software masks transfer delays, and it is supplied or enabled by Enfabrica.

The software runs in existing hardware and operating system environments and is based on widely adopted RDMA interfaces. This means that deploying the system is straightforward and does not require major architectural changes.
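To make the idea of memory tiering concrete, here is a minimal sketch of a two-tier store: a small fast tier standing in for GPU-attached HBM, and a large pool standing in for the Ethernet-attached DDR5. It is an illustration only, not Enfabrica's software. The class name, the least-recently-used eviction policy, and the in-process dictionaries are all assumptions, and no real RDMA transfer takes place.

```python
from collections import OrderedDict


class TieredStore:
    """Toy two-tier store: a small fast tier backed by a large pooled tier."""

    def __init__(self, fast_capacity: int):
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # stand-in for GPU-attached HBM, kept in LRU order
        self.pool = {}             # stand-in for the Ethernet-attached memory pool

    def put(self, key, value):
        self.fast[key] = value
        self.fast.move_to_end(key)  # mark as most recently used
        while len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)  # least recently used
            self.pool[old_key] = old_value  # demote to the pool instead of discarding

    def get(self, key):
        if key in self.fast:
            self.fast.move_to_end(key)
            return self.fast[key]
        value = self.pool.pop(key)  # raises KeyError if the key is unknown
        self.put(key, value)        # promote back into the fast tier
        return value


store = TieredStore(fast_capacity=2)
for name in ("ctx-a", "ctx-b", "ctx-c"):
    store.put(name, f"cached state for {name}")
print(sorted(store.pool))  # entries that were demoted to the pool
```

A production tiering layer would also need to prefetch or overlap transfers with computation to hide the extra latency of the pooled tier, which this sketch does not attempt.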

With the EMFASYS memory pool, owners of AI servers gain efficiency: computational resources are better utilized, expensive GPU memory is not wasted, and overall infrastructure costs can be reduced.

Availability forecast

The EMFASYS AI memory fabric system and the 3.2 Tb/s ACF-S SuperNIC chip are currently in testing with selected customers. It is unclear when general availability is planned, if at all.

For now, availability also depends on the results of those tests, although the company's website already lets you attempt to place an order.

For its part, Enfabrica is an active advisory member of the Ultra Ethernet Consortium (UEC) and contributes to the Ultra Accelerator Link (UALink) consortium, which suggests that it is unlikely to simply disappear from the map.

Source: Enfabrica.

Source: https://www.adrenaline.com.br/hardware/nova-tecnologia-apoiada-pela-nvidia-promete-reduzir-custos-de-ia-em-ate-50-com-memoria-ddr5-via-ethernet/