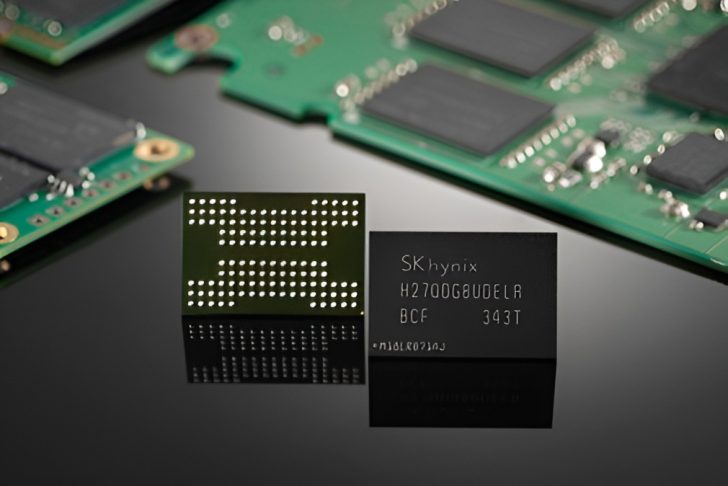

NVIDIA and SK hynix are working together to develop a new type of SSD aimed specifically at artificial intelligence inference, a move that could reshape the role of storage in data centers and raise the alert level from orange to red in the global NAND memory market.

The project, known internally as Storage Next, seeks a significant leap in input/output performance, with figures that far exceed those of current enterprise SSDs.

The initiative comes at a delicate time, as the focus of the AI industry begins to shift from model training to large-scale inference, which demands architectures capable of handling massive volumes of parameters with lower latency and stricter energy efficiency.

What is “AI SSD” and why does it exist?

According to information released by the South Korean press, the so-called AI SSD is a storage device based on NAND Flash, but designed from the ground up to meet the access patterns typical of large model inference.

Unlike traditional SSDs, which prioritize general use in servers and corporate systems, this new category seeks to act as a type of middle layer between memory and storage.

The most cited technical goal is ambitious: up to 100 million IOPS, a level around ten times higher than that seen in the enterprise SSDs widely used today.
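To put the 100 million IOPS goal in perspective, here is a rough back-of-the-envelope sketch of the raw data rate it would imply. The block sizes (512 B and 4 KiB) are illustrative assumptions, not figures from the reports. The baseline is simply the target divided by ten, following the "around ten times" comparison.

```python
def throughput_gb_per_s(iops: float, block_bytes: int) -> float:
    """Raw data rate in GB/s (decimal) implied by an IOPS figure at a given block size."""
    return iops * block_bytes / 1e9


TARGET_IOPS = 100_000_000          # goal cited in the reports
BASELINE_IOPS = TARGET_IOPS / 10   # "around ten times" lower, per the comparison above

# Block sizes are assumptions chosen for illustration only.
for block_bytes in (512, 4096):
    target = throughput_gb_per_s(TARGET_IOPS, block_bytes)
    baseline = throughput_gb_per_s(BASELINE_IOPS, block_bytes)
    print(f"{block_bytes:>5} B blocks: target {target:.1f} GB/s, baseline {baseline:.1f} GB/s")
```

The smaller the block, the less bandwidth is needed to sustain a given IOPS figure, so the headline number says little on its own without the access size behind it.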

Performance would be achieved through a combination of dedicated drivers, extreme parallelism and closer integration with AI accelerators, especially NVIDIA GPUs.

Inference changes the logic of AI infrastructure

For years, the discussion around AI infrastructure has centered on GPUs, HBM, and DRAM. But with the spread of increasingly large models and the growth of commercial inference, this balance is beginning to shift.

In real applications, models need to continuously access large volumes of data and parameters, something that exceeds the economic and physical capacity of traditional memories.

It is from this perspective that the AI SSD is being conceived: it would function as a pseudo-memory tier, slower than DRAM or HBM, but much denser, more scalable and more energy efficient. The aim is to ease I/O bottlenecks and cut the time that GPUs spend idle waiting for data.

Strategic collaboration between NVIDIA and SK hynix

The partnership between NVIDIA and SK hynix has strong roots: the South Korean manufacturer is already one of the main global suppliers of DRAM, NAND and HBM, while NVIDIA dominates the hardware and software ecosystem for AI.

Reports indicate that SK hynix is expected to present a functional prototype by the end of 2026, with commercial maturity expected around 2027.

NAND architectures and controllers capable of directly meeting the requirements of data center-scale AI inference are being evaluated.

Growing pressure on NAND supply

The main downside of this advance is a concrete concern about the NAND Flash supply chain. Demand for memory is already under severe strain due to the expansion of data centers, cloud services and AI applications.

If AI SSDs become standard in inference environments, NAND consumption could accelerate in a similar way to what happened with DRAM and HBM in recent years, changing the balance of supply and demand for consumer electronics.

Recent industry data indicates that a large part of SK hynix’s production capacity for DRAM, NAND and HBM is already committed until 2026, which contributes to the increase in contract prices.

Analysts point out that NAND may face a more severe shortage cycle, affecting manufacturers of conventional SSDs and the consumer market.

How this can weigh on your pocket

Intense demand for memory chips is already having tangible effects on the consumer technology market.

The prices of DRAM and NAND Flash memory, which make up most of the cost of RAM modules and SSDs, have risen significantly in recent months, a trend that analysts directly associate with the lower availability of these components in traditional production lines.

Recent reports from TrendForce indicate that NAND contracts may have seen accumulated increases of two hundred percent or more since the beginning of 2025, with much of this rise occurring in a short space of time as vendors prioritize enterprise customers and data centers.
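A percentage increase is easy to misread, so here is a minimal sketch of what an accumulated rise of two hundred percent means for a price. The starting value of 100 is a hypothetical index used only for illustration, not a real contract price.

```python
def price_after_increase(base_price: float, increase_pct: float) -> float:
    """Price after applying a cumulative percentage increase to a base price."""
    return base_price * (1 + increase_pct / 100)


BASE_INDEX = 100.0  # hypothetical index value, not an actual NAND contract price

new_index = price_after_increase(BASE_INDEX, 200)
print(f"Index after a 200% increase: {new_index:.0f} ({new_index / BASE_INDEX:.0f}x the base)")
```

In other words, a 200% increase means the price triples rather than doubles.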

This has practical effects for anyone looking to build or upgrade a computer, change an SSD or buy a new laptop.

Studies indicate that PC and notebook manufacturers are already adjusting their prices to reflect the higher cost of memory and storage in final products: price increases on components, more modest base configurations and even portfolio revisions are being discussed to balance margins.

Consumers may therefore notice that devices with higher specifications (more RAM or larger SSD capacity) are becoming more expensive or available in smaller quantities, and that the traditional drop in prices over time could be delayed by several quarters, extending the trend of high prices until at least 2027.

Change in hardware hierarchy

The development of the AI SSD says a lot about where the hardware hierarchy is heading in the coming months: storage stops being a secondary component and starts to directly influence performance, costs and scalability.

By bringing NAND closer to the world of inference, NVIDIA and SK hynix signal that the future of AI will depend as much on efficient data flows as on raw computing power.

The move also indicates that bottlenecks previously considered acceptable are becoming strategic obstacles in a scenario of increasingly large models and real-time applications.

In other words, the pace of AI from 2026 onwards may depend less on how many GPUs there are in the world and more on how many bits of NAND make it out of factories.

Source: SDX Central

Source: https://www.adrenaline.com.br/hardware/novo-ai-ssd-nvidia-sk-hynix-mercado-nand-preco-subindo/