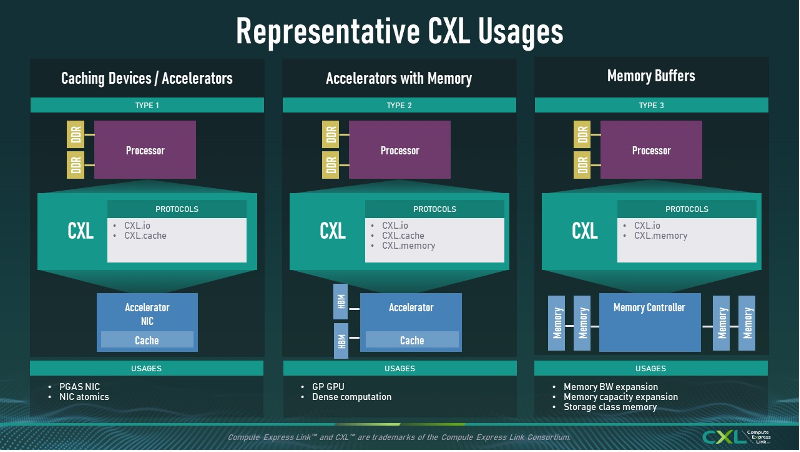

The new generation of Compute Express Link (CXL) marks a significant step forward for the memory interconnect ecosystem, doubling the bandwidth and migrating to PCIe 7.0, which now becomes the mandatory basis for the specification.

The move changes hardware planning for data centers and accelerates the race for platforms capable of handling large-scale AI and heterogeneous computing workloads.

What is CXL for in practice?

CXL (Compute Express Link) was created to solve a classic bottleneck in modern systems: the difficulty different components have in exchanging data quickly and coherently, without duplicating memory and without relying solely on the CPU as a mediator.

As servers have come to combine CPUs, GPUs, AI accelerators, DPUs, FPGAs and memory tiers in multiple form factors, the data flow needs to be more efficient than traditional interconnects allow.

In essence, CXL acts as a high-speed bridge with memory coherence, allowing multiple devices to access and share the same memory space without unnecessary copies. This reduces latency, improves utilization of the total available memory, and enables more flexible architectures.

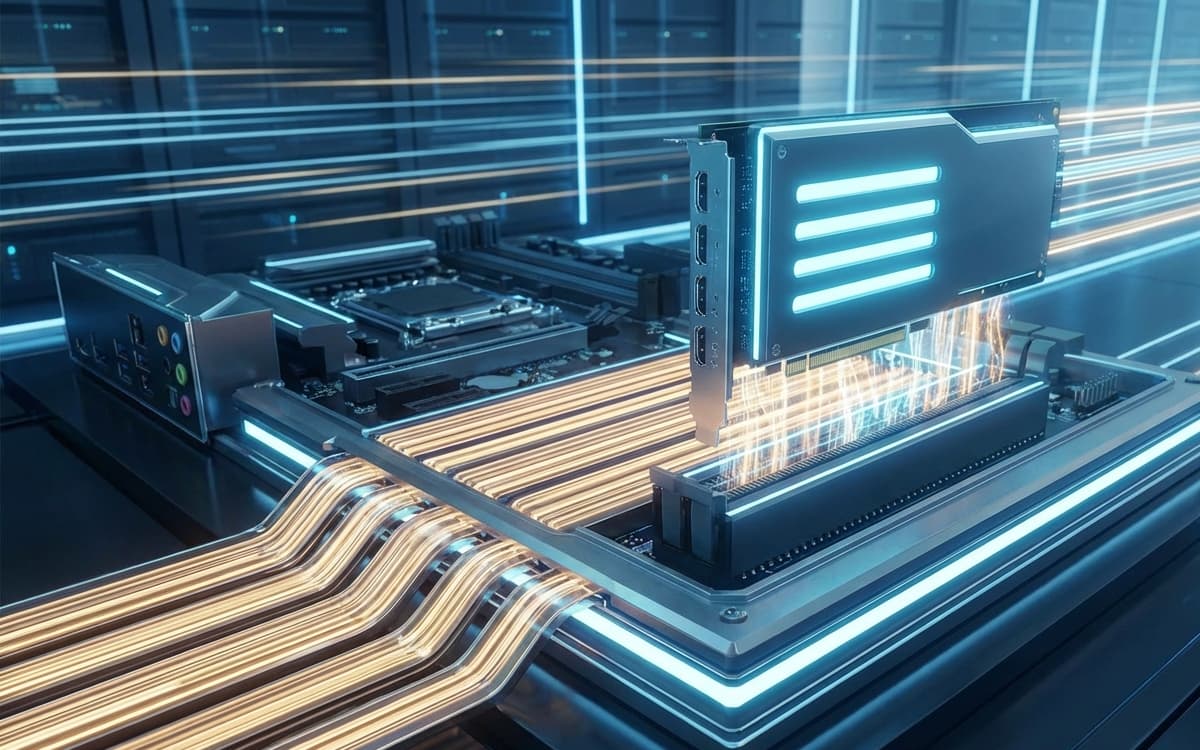

The standard was designed for three main functions:

1. Memory expansion without changing the CPU

With CXL, you can add external memory modules that connect directly to the CPU or a CXL switch. This creates an expandable “pool” that can be redistributed depending on the load.

Data centers thus gain the ability to adjust memory dynamically, avoiding waste and reducing operating costs.
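The pooling idea can be illustrated with a toy model. The sketch below is only a bookkeeping illustration: the `MemoryPool` class, its methods and the host names are hypothetical and do not correspond to any real CXL interface or driver API.

```python
class MemoryPool:
    """Toy model of a shared memory pool (hypothetical, not a CXL API)."""

    def __init__(self, capacity_gib):
        self.capacity_gib = capacity_gib
        self.allocations = {}  # host name -> GiB currently assigned

    def free_gib(self):
        """Capacity not yet assigned to any host."""
        return self.capacity_gib - sum(self.allocations.values())

    def assign(self, host, gib):
        """Give `gib` more capacity to `host`; return False if the pool lacks room."""
        if gib <= 0:
            raise ValueError("gib must be positive")
        if gib > self.free_gib():
            return False
        self.allocations[host] = self.allocations.get(host, 0) + gib
        return True

    def release(self, host):
        """Return all of a host's capacity to the pool."""
        return self.allocations.pop(host, 0)


pool = MemoryPool(capacity_gib=1024)
pool.assign("host-a", 768)
ok = pool.assign("host-b", 512)        # refused: only 256 GiB are free
pool.release("host-a")                 # host-a's load drops, capacity returns
ok_after = pool.assign("host-b", 512)  # now succeeds
```

The point of the sketch is that capacity follows the load: memory released by one host becomes available to another without touching either machine's local DRAM.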

2. Memory sharing between multiple devices

CXL’s big turning point is that it lets AI accelerators, GPUs, and other devices access the same memory region coherently, which eliminates the work of copying data between separate buffers.

In AI applications, for example, this helps large models to be processed by multiple chips simultaneously, with more fluid communication.

3. Coherent interconnection for heterogeneous computing

Modern servers rarely rely on the CPU alone. The trend is for intensive tasks to migrate to specialized accelerators.

CXL creates a common interconnection basis for these components to act as parts of a single logical system. This is especially relevant in:

- AI model training and inference

- HPC (high performance computing)

- elastic memory pools

- in-memory databases

- highly parallel workloads

Thanks to built-in coherence, all devices maintain a synchronized view of the data, without conflicts or inconsistencies.

Why has this become so important now?

With the growth of generative AI, ever-larger models require large amounts of accessible memory.

The current strategy of adding more DRAM modules to the same socket is reaching its physical and thermal limits. CXL emerges as a scalable alternative, making it possible to build servers with:

- disaggregated memory shared between racks

- flexible topologies using switches and additional fabric levels

- more bandwidth per link, now expanded with CXL 4.0

- controlled latency, even with multiple devices in the path

The result is an architecture ready to handle massive processing loads without requiring a complete redesign of the CPU.

CXL 4.0 changes the bar for bandwidth

The CXL Consortium has formally published the CXL 4.0 specification, which goes from 64 GT/s to 128 GT/s, relying directly on the PCIe 7.0 interface as its physical layer.
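To put the transfer rates in perspective, the arithmetic below converts GT/s into raw bandwidth per direction. It is a rough sketch under two assumptions that are not in the specification summary above: a x16 link width, and one bit per transfer per lane with no flit, FEC or protocol overhead subtracted. The function name `raw_gbytes_per_s` is illustrative.

```python
def raw_gbytes_per_s(gt_per_s, lanes):
    """Raw one-direction bandwidth in GB/s.

    Assumes one bit per transfer per lane and ignores all
    encoding and protocol overhead, so this is an upper bound.
    """
    return gt_per_s * lanes / 8  # bits -> bytes


# x16 is an assumed link width chosen for illustration.
for name, rate in [("64 GT/s", 64), ("128 GT/s", 128)]:
    print(name, "x16:", raw_gbytes_per_s(rate, 16), "GB/s per direction (raw)")
```

Under these assumptions a x16 link goes from 128 GB/s to 256 GB/s per direction; usable throughput will be lower once overhead is accounted for.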

This represents the biggest leap since the creation of the standard and consolidates CXL as the central axis of architectures that combine CPUs, GPUs, accelerators and varied memory layers.

According to the official document, the new version keeps the 256-byte flit format, adds a native x2 mode, allows up to four retimers per channel and delivers relevant RAS (reliability, availability and serviceability) improvements, such as broader error visibility and advanced maintenance mechanisms.

"The release of CXL 4.0 is part of a new stage in coherent memory bridging, expanding bandwidth and preserving compatibility with previous generations, while preparing the industry for new usage models."

Derek Rohde, consortium president

PCIe 7.0 becomes the mandatory core

CXL's migration to PCIe 7.0 is not symbolic. In practice, it confirms that the ecosystem of servers, motherboards, accelerators and controllers will have to move towards a platform fully aligned with the new bus. PCIe 7.0 therefore becomes a prerequisite for any real implementation of CXL 4.0.

The dependency directly impacts hardware vendors who are still stabilizing products with PCIe 5.0 and planning the arrival of PCIe 6.0.

The new specification suggests that adoption may accelerate, especially in segments such as generative AI, HPC and disaggregated memory pools.

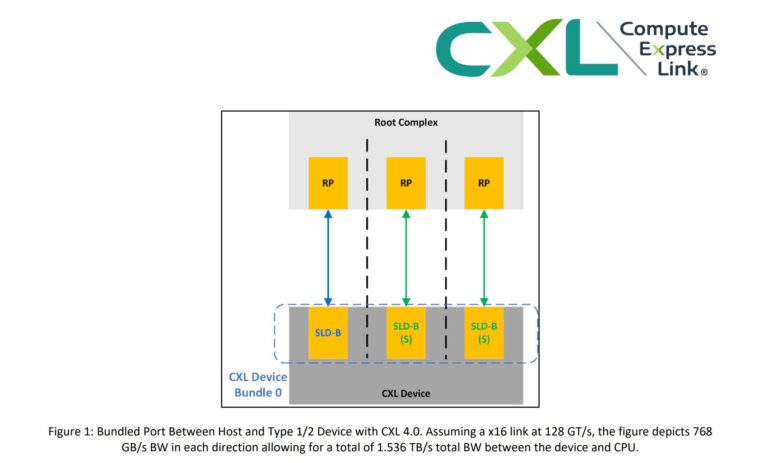

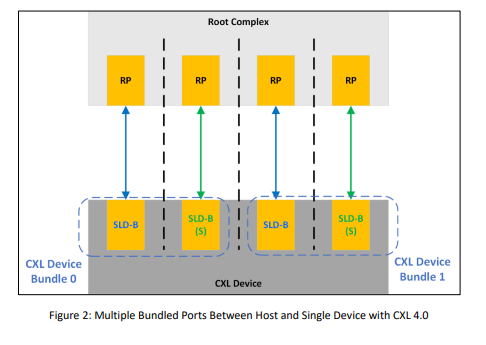

Bundled Ports create new possibilities between hosts and accelerators

One of the most talked-about features of CXL 4.0 is the Bundled Port, which allows two physical links to be combined to increase the effective bandwidth between the host and Type 1/2 devices. This makes it easier to use AI accelerators, DPUs, and hybrid cards that need to transmit large volumes of data with controlled latency.

The functionality also signals a closer relationship between CXL and interconnect architectures that work with link aggregation, something critical for modular systems and servers optimized for parallel workloads.
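The effect of bundling on raw bandwidth can be sketched with simple arithmetic. The example below is an idealized upper bound, not a model of the actual Bundled Port mechanism: the `Link` class and `bundle_raw_gbytes_per_s` function are hypothetical, the x8 width is an assumed value, and traffic is assumed to spread perfectly across both links with no protocol overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """One physical link (hypothetical helper type)."""
    gt_per_s: int  # per-lane transfer rate
    lanes: int     # link width

    def raw_gbytes_per_s(self):
        # One bit per transfer per lane, overhead ignored.
        return self.gt_per_s * self.lanes / 8


def bundle_raw_gbytes_per_s(links):
    """Idealized aggregate: assumes perfect load spreading across links."""
    return sum(link.raw_gbytes_per_s() for link in links)


single = Link(gt_per_s=128, lanes=8)  # x8 width is an assumption
bundle = [single, single]             # two physical links combined
print(bundle_raw_gbytes_per_s(bundle), "GB/s per direction (raw, ideal)")
```

In this idealized case two x8 links at 128 GT/s together offer the same raw bandwidth as one x16 link; real gains depend on how traffic is distributed across the bundle.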

CXL 4.0 advances RAS capabilities by making maintenance more granular, enabling operations such as:

- more efficient fault correction and isolation

- host-initiated repairs at boot

- more flexible memory sparing options

- greater visibility in diagnosing inconsistencies

The additions reinforce the concept of shared and expandable memory, reducing bottlenecks in pooling or tiering scenarios.

Compatibility remains preserved

Despite the move to PCIe 7.0, the consortium maintained full compatibility with CXL 1.x, 2.0 and 3.x, something essential for manufacturers who depend on long development cycles.

The strategy preserves investments from previous generations and facilitates combinations of devices, retimers and switches in hybrid data centers, typical of large cloud providers.

Technical comparison: evolution of CXL

Based on the data published by VideoCardz and the official CXL Consortium document, the evolution between versions can be summarized as follows:

| Category | CXL 1.1 | CXL 2.0 | CXL 3.0 | CXL 4.0 |
|---|---|---|---|---|
| Maximum transfer rate | up to 32 GT/s | up to 32 GT/s | up to 64 GT/s | 128 GT/s |
| PCIe base | PCIe 5.0 | PCIe 5.0 | PCIe 6.0 | PCIe 7.0 |
| Flit format | 68 bytes | 68 bytes | 256 bytes | 256 bytes (retained) |
| Memory/RAS | simple expansion | adds pooling | advanced management and improved coherence | improved RAS, greater error visibility, x2 mode |
| Fabric/Interconnect | point to point | switches and pooling | multilevel topologies | Bundled Ports, up to 4 retimers, greater reach |
| Compatibility | 1.1 only | compatible with 1.1 | compatible with 2.0 and 1.x | compatible with 3.x, 2.0 and 1.x |

Additionally, the new specification integrates support for multilevel switching, expanding the architecture to denser topologies.

Advance refines modern data centers

With the multiplication of AI workloads and the need to transport large volumes of data between heterogeneous chips, CXL 4.0 arrives as a strategic piece in the construction of scalable servers.

The reliance on PCIe 7.0 also acts as an accelerator for the next generation of platforms, pushing OEMs and processor manufacturers toward faster upgrade cycles.

Towards a more ambitious era of interconnection

The release of the 4.0 specification does not close a cycle, but opens a race for solutions capable of absorbing this bandwidth and exploiting the new coherent fabric logic.

Once PCIe 7.0 gains traction, CXL is likely to become the most visible link between CPU, AI accelerators, and increasingly distributed memory layers.

Source: Compute Express Link

Source: https://www.adrenaline.com.br/hardware/cxl-4-0-pcie-7-0-banda-larga-tecnologia/